A Decade of Social Bot Detection

- Authors: Stefano Cresci

- Publication, Year: Communications of the ACM, October 2020

- Link to Article



Key Insights

- Social bots are a long studied, yet unsolved, problem in our online social ecosystems and several detection trends appeared through time. The latest and most-promising advance is represented by group-based detectors.

- Deception detection is intrinsically adversarial. The application of adversarial machine learning can give us an edge in the fight against all forms of online manipulation and automation.

- Recent advances in computing and AI (for example, deepfakes) make individual bots indistinguishable from legitimate users. Future efforts should focus on measuring the extent of inauthentic coordination rather than on trying to classify the nature of individual accounts.

Summary of Suggestions

Future deception detection techniques should:

- Focus on identifying suspicious coordination independently of the nature of individual accounts

- Avoid providing binary labels in favor of fuzzier and multifaceted indicators

- Favor unsupervised/semi-supervised approaches over supervised ones

- Account for adversaries by design

In addition, part of the massive efforts we dedicated to the task of detection should also be reallocated to measure (human) exposure to these phenomena and to quantify the impact they possibly have.

Introduction

In the aftermath of the 2016 U.S. elections, the world started to realize the gravity of widespread deception in social media. Following Trump’s exploit, we witnessed the emergence of a strident dissonance between the multitude of efforts for detecting and removing bots, and the increasing effects these malicious actors seem to have on our societies. This paradox opens a burning question: What strategies should we enforce in order to stop this social bot pandemic?

The Social Bot Pandemic

There is still no agreed-upon definition of a social bot. The diversity of definitions results from different fields studying the problem as well as from the diversity of behavior that bots display. For example:

- Computer scientists and engineers tend to define bots based on a technical perspective

- Social scientists tend to focus on social and/or political implications and thus may define bots differently

- Accounts that are driven both by human interaction and by algorithms have been described as human-assisted bots or as bot-assisted humans

Bots can be either malicious or benign, and researchers tend to focus on malicious bots.

The reason becomes obvious when you look at how Stieglitz et al. categorize bots by their intent and capacity to imitate humans:

- Malicious bots tend to focus on imitating humans and often influencing their behavior

- Benign bots tend to be obvious, do not imitate humans, and may even be helpful (e.g., news and recruitment bots, bots utilized during emergencies, etc.)

Prevalence of Bots

- In 2017, it was estimated that 15% of accounts on Twitter were bots [source]

- In 2019, it was estimated that 11% of accounts on Facebook were bots [source]

Even more worrisome, when strong political or economic interests are at stake, the presence of bots dramatically increases.

- In 2019, it was estimated that 71% of accounts mentioning U.S. stocks were likely to be bots [source]

- Similar results were reported with respect to cryptocurrency [source] and conversation related to the COVID-19 "infodemic" [source]

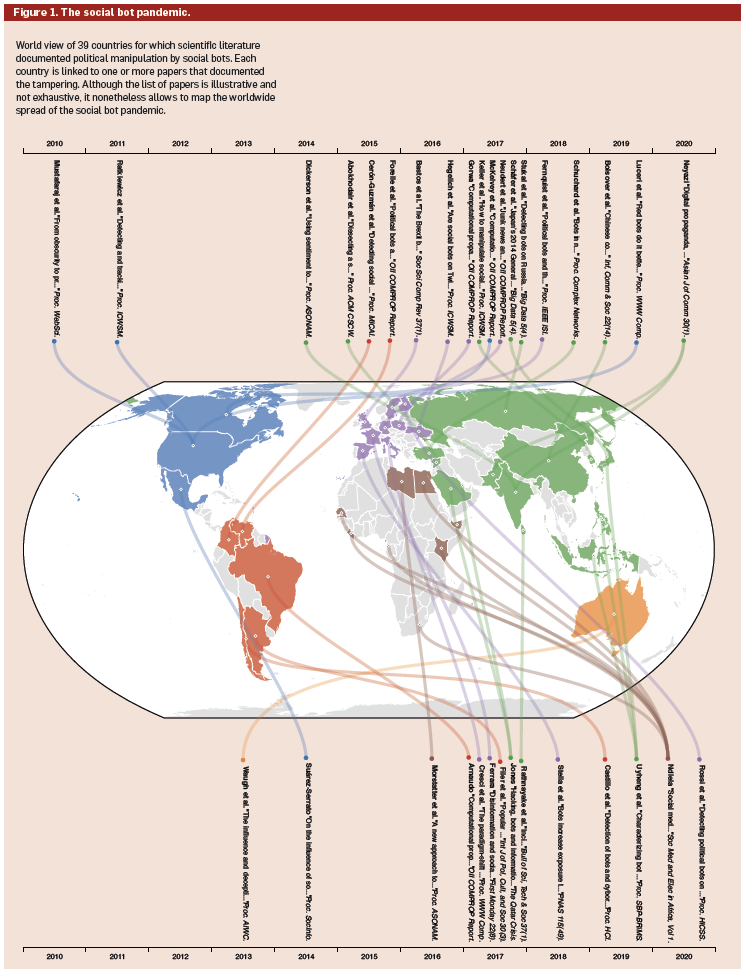

Figure 1 illustrates the global nature of social bots' attempts to influence the world; however, whether they have a real impact is disputed.

- Some studies have reported on social bots' pivotal role in increasing the spread of disinformation, polarization, and hateful speech ([source], [source])

- Others claim that their effect on the world is not necessarily significant, and that human beings play a larger role in the spread of false news [source]

The ubiquity of social bots is also partly fueled by the availability of open source code...

Number of Twitter bot repos on Github...

The looming picture is one where social bots are among the weapons of choice for deceiving and manipulating crowds. These results are backed by the same platforms where information operations took place, namely Facebook ([source]), Twitter ([source]), and Reddit ([source]), which have banned tens of thousands of accounts involved in coordinated activities since 2016.

Growth of Research

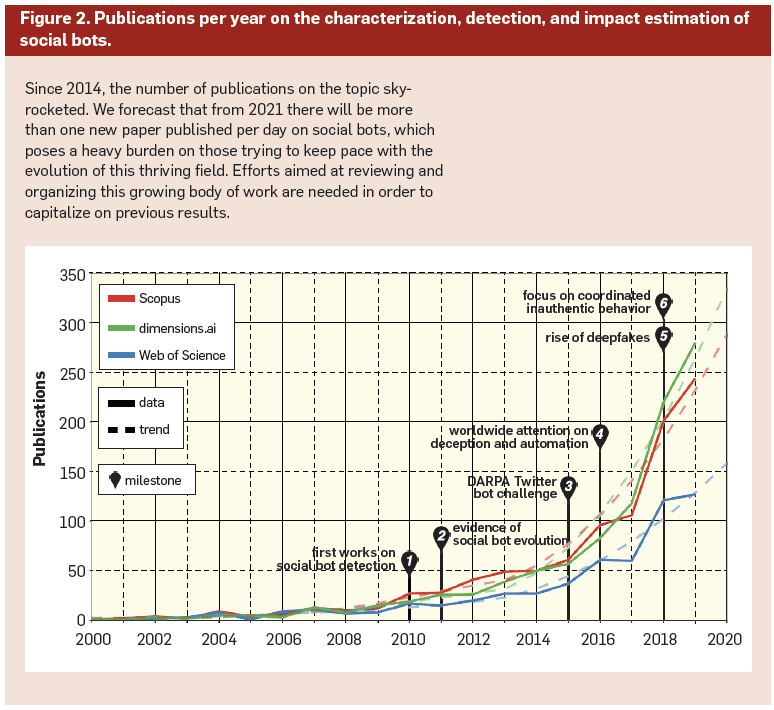

The growth of the problem has, fortunately, led to the growth of work seeking to detect social bots (Fig. 2)

Perhaps even more importantly, the rate at which new papers are published implies that a huge worldwide effort is taking place in order to stop the spread of the social bot pandemic. But where is all this effort leading?

The Dawn of Social Bot Detection

Many early attempts focused on the analysis of individual accounts. (Figure 3A below)

The key assumption in this approach is that bot accounts are clearly different from non-bot accounts

- These approaches typically apply off-the-shelf, general-purpose classification algorithms and focus on designing effective machine learning features that separate bots from legitimate accounts.
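As a rough illustration of the individual-account approach described above, the following minimal sketch trains a nearest-centroid classifier on a handful of per-account features. The features (posts per day, followers/friends ratio, reshare fraction), the data values, and all function names are assumptions made up for this example; they are not taken from the article or from any specific detector.

```python
from statistics import mean

# Hypothetical labeled accounts: (posts per day, followers/friends ratio,
# fraction of posts that are reshares). All values are invented.
LABELED_ACCOUNTS = [
    ((120.0, 0.05, 0.95), "bot"),
    ((200.0, 0.02, 0.90), "bot"),
    ((150.0, 0.10, 0.85), "bot"),
    ((5.0, 1.20, 0.30), "human"),
    ((12.0, 0.80, 0.20), "human"),
    ((3.0, 1.50, 0.10), "human"),
]


def fit_scaler(rows):
    """Return per-feature (min, max) bounds used for min-max scaling."""
    return [(min(col), max(col)) for col in zip(*rows)]


def scale(row, bounds):
    """Rescale one feature vector using the fitted bounds."""
    return tuple(
        (value - low) / (high - low) if high > low else 0.0
        for value, (low, high) in zip(row, bounds)
    )


def fit_centroids(samples, bounds):
    """Compute the mean scaled feature vector of each class."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(scale(features, bounds))
    return {
        label: tuple(mean(col) for col in zip(*rows))
        for label, rows in by_label.items()
    }


def classify(features, centroids, bounds):
    """Assign the label of the nearest centroid (squared Euclidean distance)."""
    scaled = scale(features, bounds)

    def distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(scaled, centroid))

    return min(centroids, key=lambda label: distance(centroids[label]))


bounds = fit_scaler([features for features, _ in LABELED_ACCOUNTS])
centroids = fit_centroids(LABELED_ACCOUNTS, bounds)

print(classify((180.0, 0.03, 0.92), centroids, bounds))
print(classify((8.0, 1.00, 0.25), centroids, bounds))
```

Note that the classifier can only ever answer "bot" or "human" and depends entirely on the labeled examples it was given, which is where the problems discussed next come from.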

Problems with the Approach

Systems that focus on only a few characteristics can be easily gamed. However, even more sophisticated algorithms that take thousands of account features into account can run into issues.

Training machine learning classifiers requires training data. Unfortunately, ground-truth data is hard to come by

Most "ground-truth" data that is utilized is simply hand-coded by humans

This approach is problematic for at least two reasons:

- Different coders may utilize different methods, which may lead to different results/conclusions

- Humans have been shown to suffer from several annotation biases and largely fail at spotting more sophisticated bots, correctly annotating only 24% of these accounts [source]

Many of these classifiers return a binary classification. However, many malicious bots display a mixture of bot-like and human-like behavior

The evolutionary nature of social bots creates an endless cat-and-mouse game.

The Issue of Bot Evolution

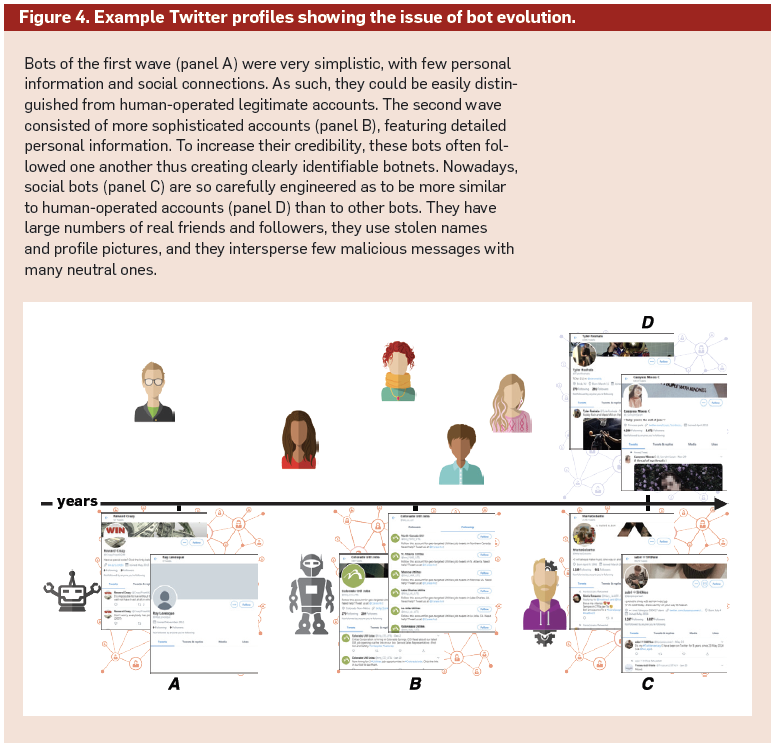

Bot evolution is the process by which the creation of new, sophisticated bot detection methods "forces" the creators of malicious bots to design more sophisticated social bots.

- This creates a vicious circle in which detection becomes increasingly difficult and the ground-truth data used to train earlier machine learning classifiers becomes obsolete

- This paper offered a classifier for detecting evolving social bots on Twitter

- Unfortunately, this paper showed that more recent and sophisticated bots could not be detected by this classifier. (Fig. 4)

Newer bots are more similar to legitimate human-operated accounts than to other older bots.

Why?

Cyborgs exist halfway between traditional concepts of bots and humans

These cyborgs are now using AI to create text (e.g., via the GPT-2 and GPT-3 deep learning models [source]) and profile pictures (e.g., via StyleGAN deep learning models [source])

Blurring Lines of "Real" and "Fake"

Kate Starbird discusses how the lines are blurring between what is "real" and "fake" online in this Nature article.

- She mentions that what we see now may just be the tip of the iceberg and that a new reality littered with much more sophisticated deception may be on the way, unless we figure out a way to address these issues properly

As one form of “social Web virus,” bots mutated thus becoming more resistant to our antibodies. The social bot pandemic gradually became much more difficult to stop. Within this global picture, dichotomous classifications — such as human vs bot, fake vs real, coordinated vs not coordinated — might represent oversimplifications, unable to grasp the complexity of these phenomena and unlikely to yield accurate and actionable results. Ultimately, the findings about the evolution of online automation and deception tell us the naive assumption of early, supervised bot detection approaches — according to which bots are clearly separable from legitimate accounts — is no longer valid.

The Rise of Group Approaches

Around 2012-2013, a number of group-based approaches began to pop up.

- The rationale for targeting groups of accounts rather than individual accounts is that bots often coordinate their behavior, creating a botnet that interacts with itself to amplify its overall impact and influence

- Large groups of accounts offer more data for AI algorithms

- In 2018, Facebook and Twitter both acknowledged the importance of focusing on coordinated inauthentic behavior

Most group detectors have proposed shifting from general-purpose machine learning algorithms (e.g., SVMs and decision trees) to ad-hoc algorithms designed specifically for detecting bots, in an effort to boost detection performance.

Group detectors are also typically based on unsupervised or semi-supervised approaches.

- This helps overcome the generalization problems of supervised detectors, which are limited by the scarce availability of exhaustive and reliable training datasets.
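The group-based, unsupervised idea described above can be sketched as follows: compare accounts pairwise by how much of their reshared content overlaps, link accounts whose overlap is high, and report connected groups. This is only one hypothetical way to measure coordination; the data, the Jaccard similarity measure, the threshold, and the function names are assumptions for illustration and not the method of any particular detector mentioned in the article.

```python
from itertools import combinations

# Hypothetical input: the set of post IDs each account reshared.
RESHARES = {
    "acct_a": {1, 2, 3, 4, 5},
    "acct_b": {1, 2, 3, 4, 6},
    "acct_c": {2, 3, 4, 5, 6},
    "acct_d": {10, 11, 12},
    "acct_e": {20, 21},
}


def jaccard(left, right):
    """Share of items two accounts have in common (0.0 to 1.0)."""
    union = left | right
    return len(left & right) / len(union) if union else 0.0


def coordinated_groups(reshares, threshold=0.5, min_size=3):
    """Link accounts with similar reshare sets and return the linked groups.

    No labels are needed: groups emerge only from similarity between accounts.
    """
    neighbors = {account: set() for account in reshares}
    for first, second in combinations(reshares, 2):
        if jaccard(reshares[first], reshares[second]) >= threshold:
            neighbors[first].add(second)
            neighbors[second].add(first)

    seen, groups = set(), []
    for account in reshares:
        if account in seen:
            continue
        # Depth-first search collects one connected component.
        stack, component = [account], set()
        while stack:
            current = stack.pop()
            if current in component:
                continue
            component.add(current)
            stack.extend(neighbors[current] - component)
        seen |= component
        if len(component) >= min_size:
            groups.append(sorted(component))
    return groups


print(coordinated_groups(RESHARES))
```

The sketch flags the group as suspicious without deciding whether any single member is a bot, a cyborg, or a human, which matches the shift in focus toward coordination.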

Bot Detection Techniques Over the Past 10 Years

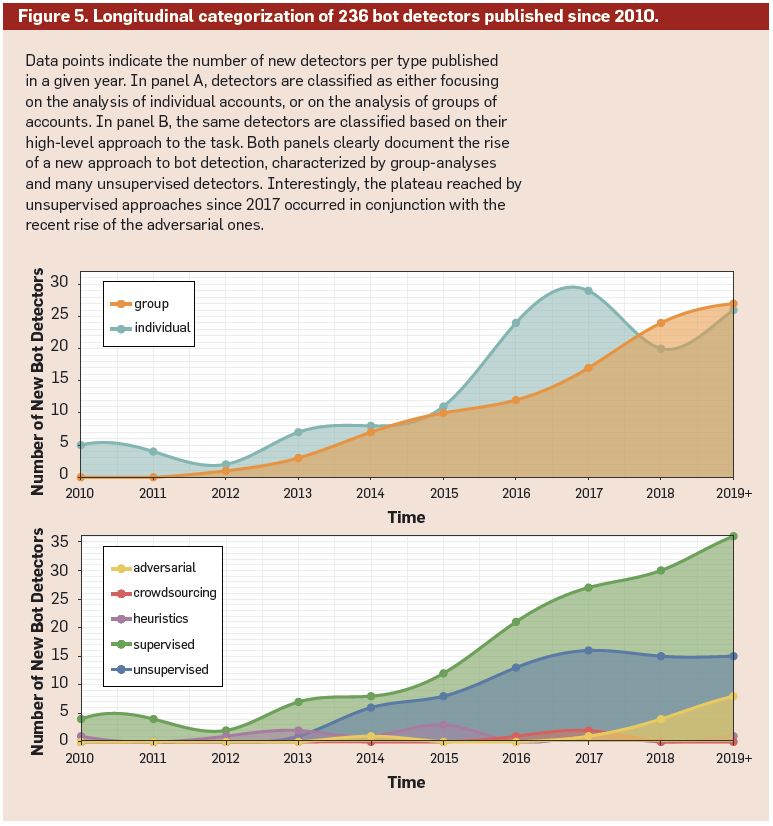

The author surveyed 230 bot detection papers; see Figure 5 for the findings.

- Clearly, group detection methods have been steadily on the rise since 2012/2013

- We also see that there was a rise in unsupervised methods which, interestingly, plateaus in 2017, when adversarial techniques start to grow in popularity

- Supervised methods also appear to grow consistently, which the author suggests may be driven by the natural language processing community, which typically relies on text analysis and binary classification methods

- In contrast, the author highlights the complex networks community as typically favoring a graph-based approach

A couple of examples are provided in the original text to clarify how the different types of methods are represented in the survey's classification. Specifically, see the end of page 79 and the beginning of page 80 for these details.

As a consequence [of certain communities favoring certain methods], some combinations of approaches—above all, text-based detectors that perform unsupervised, group analyses—are almost unexplored and definitely underrepresented in the landscape of existing bot detectors. In the future, it would be advisable for multiple efforts to follow the directions that have been mostly overlooked until now.

A Glimpse into the Future of Deception Detection

The author then covers potential areas of improvement in deception detection. The comments are based on the following two observations:

Both the individual and group-based methods are reactionary

- That is, they typically respond to bad actors by gathering data on them and then building models of those bad actors' behavior, networks, etc.

In other words, scholars and OSN administrators are constantly one step behind malicious account developers.

Most of the machine learning methods utilized are designed to be operated within environments that are stationary and neutral; both of these assumptions are violated with respect to social bot detection

- Stationary — violated due to the constant evolution of bots over time

- Neutral — violated due to the fact that malicious bot creators are actively trying to avoid detection

Adversarial Machine Learning to the Rescue?

All tasks related to the detection of online deception, manipulation and automation are intrinsically adversarial.

Adversarial machine learning is a paradigm specifically designed for applications where what you're trying to detect is actively trying to fool the learned models that are doing the detection.

High-level Goal: study vulnerabilities of existing systems and possible attacks to exploit them, before such vulnerabilities are effectively exploited by adversaries. Early detection of vulnerabilities can then be utilized to build more robust detection systems.

- One practical way to do this is to actively feed your detection system instances that are designed to fool it, i.e., to increase its errors.
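To make the idea of probing a detector with deliberately crafted inputs concrete, here is a minimal sketch. It takes accounts that a toy detector already flags, nudges one feature in small steps, and measures how many of them slip past within a fixed budget of changes. The detector rule, the feature names, the step size, and the budget are all hypothetical stand-ins chosen for this example.

```python
def toy_detector(account):
    """Hypothetical stand-in detector: flags heavy posters that mostly reshare."""
    return account["posts_per_day"] > 50 and account["reshare_fraction"] > 0.8


def try_to_evade(account, detector, step=0.05, budget=3):
    """Lower the reshare fraction step by step until the detector is fooled.

    Returns the modified copy of the account, or None if `budget` steps
    are not enough to evade detection.
    """
    candidate = dict(account)  # never mutate the original record
    for _ in range(budget):
        if not detector(candidate):
            return candidate
        lowered = max(0.0, candidate["reshare_fraction"] - step)
        candidate["reshare_fraction"] = round(lowered, 2)
    return candidate if not detector(candidate) else None


# Invented accounts that the toy detector currently flags.
KNOWN_BOTS = [
    {"posts_per_day": 120, "reshare_fraction": 0.95},
    {"posts_per_day": 300, "reshare_fraction": 1.00},
    {"posts_per_day": 80, "reshare_fraction": 0.85},
]

adversarial_examples = [try_to_evade(bot, toy_detector) for bot in KNOWN_BOTS]
evasion_rate = sum(ex is not None for ex in adversarial_examples) / len(KNOWN_BOTS)
print(f"evasion rate within budget: {evasion_rate:.2f}")
```

A high evasion rate under a small budget would suggest the detector leans too heavily on a feature that an adversary can cheaply change, which is the kind of weakness this line of work aims to find before attackers do.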

Adversarial Bot Detection

In adversarial bot detection, researchers create meaningful adversarial examples which they use to test existing bot detection methods.

Adversarial examples might be:

- Sophisticated existing bots and trolls

- Bots that do not exist but whose behavior is simulated (seen in the link above)

- Bots developed ad-hoc for the sake of experimentation ([source])

The examples above are driven by the creativity of researchers. In the future, however, they could be generated by cutting-edge AI, using generative adversarial networks.

Generative Adversarial Networks


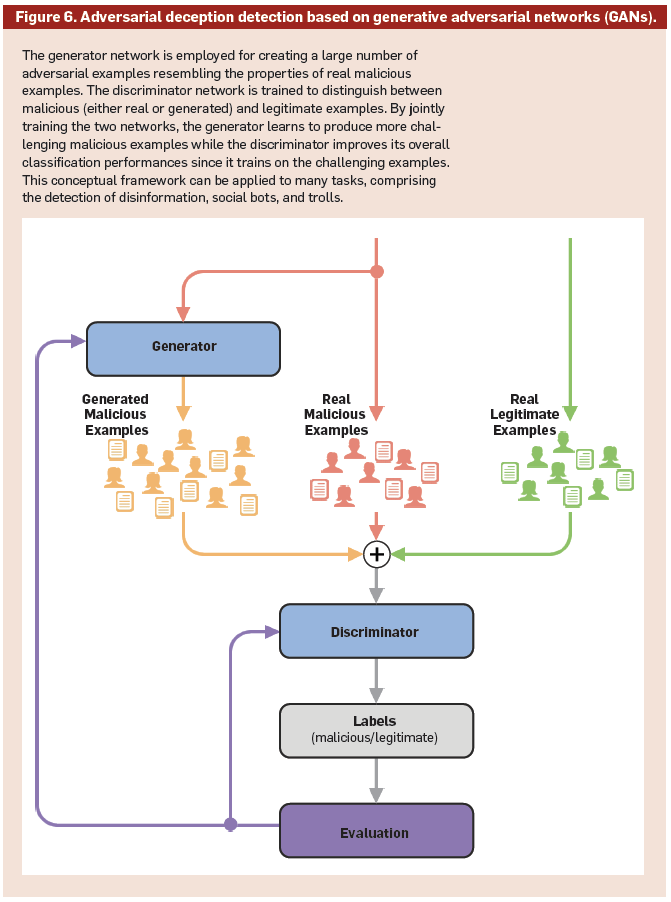

Generative adversarial networks (GANs) are a powerful machine learning framework where two competing deep learning networks are jointly trained in a game-theoretic setting. [source]

In particular, a GAN is composed of a generator network that creates data instances and a discriminator network that classifies data instances.

- See Wu et al. for examples of an early GAN approach on Twitter

Goal of Generator — to create synthetic data instances that resemble real data

- Evaluated based on its ability to induce errors within the discriminator network

Goal of Discriminator — to classify input data as either synthetic or organic

- Evaluated based on its binary classification performance
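The two competing goals above are commonly written as a single minimax objective. The formula below is the standard formulation from the general GAN literature, added here for reference rather than taken from the article; $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of real (organic) data, and $p_z$ the noise distribution the generator samples from.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to maximize $V$ by assigning high probability to real instances and low probability to generated ones, while the generator tries to minimize it by producing instances $G(z)$ that the discriminator mistakes for real data.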

… the generator of a GAN could be used as a generative model for creating many plausible adversarial examples, thus overcoming the previously mentioned limitations in this task and the scarcity of labeled datasets.

This provides us with two very useful and practical applications of GANs with respect to social bot detection

- Creating much needed training data

- Testing and improving existing detection models by detecting their weaknesses

This paradigm has never been applied to the task of social bot detection, but it was tested with promising results for related tasks, such as that of fake news generation/detection. [source]

General Generative Adversarial Network Model

Challenge for the Future of GANs

This research is in its infancy, and researchers need to invest time in developing it

Need to develop techniques for:

- Creating many different kinds of adversarial examples

- Evaluating whether these examples are realistic and representative of future malicious accounts

Open Challenges and the Way Ahead

- Organization— The large body of social bot detection research needs to be organized.

- Standardization — Standard benchmarks, frameworks, and datasets should be developed.

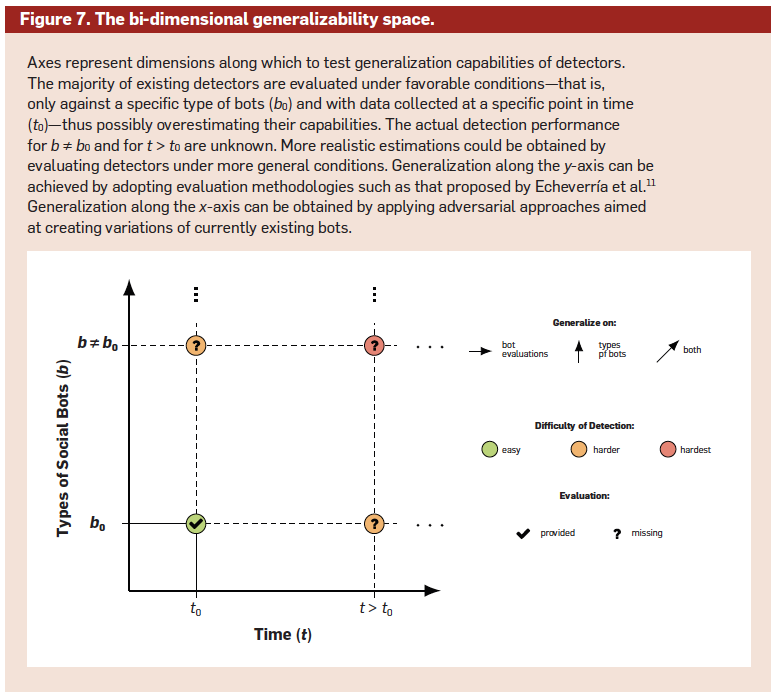

- Generalizability — This aspect of classifier performance has been largely overlooked. Deception detectors that generalize across time as well as across types of bots are needed (see Figure 7)

- Create social bot repositories

… to reach this ambitious goal, we must first create reference datasets that comprise several different kinds of malicious accounts, including social bots, cyborgs and political trolls, thus significantly adding to the sparse resources existing as of today.

Develop a diverse set of methods for developing adversarial examples

- This also requires methods of quantifying the value of adversarial examples — e.g. based on their novelty, diversity, how genuine they would appear to humans, etc.
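One hedged way to quantify the generalizability challenge listed above is a leave-one-family-out evaluation: train a detector on all bot families except one, then measure how many accounts of the held-out family it still catches. The sketch below uses a deliberately simple threshold detector on a single invented feature; the bot family names, the data, and the functions are hypothetical and only illustrate the evaluation protocol.

```python
from statistics import mean

# Hypothetical accounts: (posts per day, label, bot family or None for humans).
ACCOUNTS = [
    (150.0, "bot", "spam_2011"),
    (200.0, "bot", "spam_2011"),
    (90.0, "bot", "political_2016"),
    (110.0, "bot", "political_2016"),
    (12.0, "bot", "cyborg_2019"),
    (9.0, "bot", "cyborg_2019"),
    (5.0, "human", None),
    (10.0, "human", None),
    (15.0, "human", None),
]


def fit_threshold(train):
    """Toy detector: midpoint between mean bot and mean human posting rates."""
    bots = [rate for rate, label, _ in train if label == "bot"]
    humans = [rate for rate, label, _ in train if label == "human"]
    return (mean(bots) + mean(humans)) / 2


def recall_on_held_out_family(accounts, family):
    """Train without `family`, then report the share of its bots detected."""
    train = [row for row in accounts if row[2] != family]
    held_out = [row for row in accounts if row[2] == family]
    threshold = fit_threshold(train)
    detected = sum(rate > threshold for rate, _, _ in held_out)
    return detected / len(held_out)


families = ["spam_2011", "political_2016", "cyborg_2019"]
results = {name: recall_on_held_out_family(ACCOUNTS, name) for name in families}
for name, recall in results.items():
    print(f"{name}: recall on unseen family = {recall:.2f}")
```

In this invented data the detector catches both older families when they are held out but misses the human-like family entirely, mirroring the observation that newer bots resemble legitimate accounts more than they resemble older bots.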

Summary of Suggestions

Future deception detection techniques should:

- Focus on identifying suspicious coordination independently of the nature of individual accounts

- Avoid providing binary labels in favor of fuzzier and multifaceted indicators

- Favor unsupervised/semi-supervised approaches over supervised ones

- Account for adversaries by design

In addition, part of the massive efforts we dedicated to the task of detection should also be reallocated to measure (human) exposure to these phenomena and to quantify the impact they possibly have.

Notes by Matthew R. DeVerna